Abstract

In 2006, approximately 1.3 million peer-reviewed scientific articles were published, aided by a large rise in the number of available scientific journals from 16 000 in 2001 to 23 750 by 2006. Is this evidence of an explosion in scientific knowledge or just the accumulation of wasteful publications and junk science? Data show that only 45% of the articles published in the 4500 top scientific journals are cited within the first five years of publication, a figure that appears to be falling steadily, and only 42% receive more than one citation. For better or for worse, “Publish or Perish” appears to be here to stay, as the number of published papers has become the basis for selection to academic positions and for tenure and promotion, a criterion for the award of grants and also the source of funding for salaries. The high pressure to publish has, however, ushered in an era in which scientists increasingly conduct and publish research using ‘questionable research practices’ or even commit outright fraud. The few cases that are reported are probably only the tip of an iceberg, and the scientific community needs to be vigilant against this corruption of science.

The foundation of scientific clinical practice is evidence-based medicine. This is built largely on scholarly publications in peer-reviewed journals and, to a lesser extent, on podium presentations at reputable conferences. A peer-reviewed published paper holds a place of esteem in the scientific world. The total number of scholarly articles published in peer-reviewed journals has now passed 50 million.1 This landmark calls for introspection and an evaluation of the publication process and what it means for science.

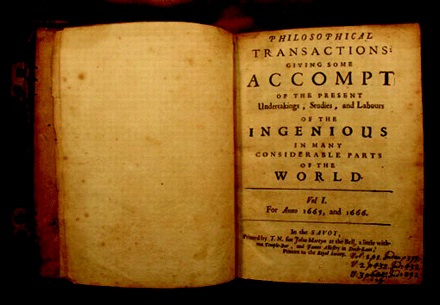

The first scientific papers were probably published in Le Journal des sçavans in Paris and the Philosophical Transactions of the Royal Society of London (Fig. 1), both in 1665. The cover page of the latter declares the purpose of the journal to be ‘Giving some accompt of the present undertakings, studies, and labours of the ingenious in many considerable parts of the world’. Even then, it was clear that the published paper was meant to be the molecular unit of research communication. Over the years, however, the published scientific paper assumed even greater importance and became the measure of a researcher’s excellence. Publishing then became compulsory for survival and progress, and the phrase “Publish or Perish”, first coined by HJ Coolidge (Fig. 2) in 1932,2 became a reality. The number of published papers became the basis for selection to academic positions and for tenure and promotion, the criterion for the award of grants and also the source of funding for salaries. It is now common to find heads of departments, and senior and even middle-level researchers, publishing as many as 30 papers in a single year. This is astonishing, as it implies that roughly every 12 days a fresh idea is conceived, a research methodology planned, the research executed and the results written up and published, an impossible task for even the most brilliant researcher.

Fig. 1

Cover of the Philosophical Transactions of the Royal Society of London, dating from 1665, which is widely considered to be one of the first scientific journals.

Fig. 2

Harold Jefferson Coolidge (1904 to 1985), who is said to have coined the phrase “Publish or Perish”. Reproduced with permission from IUCN Photo Library © United Nations.

Explosion of knowledge or just junk science?

In 2006 alone, approximately 1.3 million peer-reviewed scientific articles were published, aided by a large rise in the number of available scientific journals from 16 000 in 2001 to 23 750 by 2006.3 This avalanche of papers raises a question: are we witnessing an explosion of scientific knowledge or just the accumulation of wasteful publications and junk science? The value of a published paper is commonly measured by its citation index. However, data show that only 45% of the articles published in the 4500 top scientific journals are cited within the first five years of publication, a figure that appears to be falling steadily.4 Only 42% receive more than one citation. Add to this the problem of self-citation, which accounts for between 5% and 25% of all citations,5 and it appears that most published papers are inconsequential to science and simply pad the curricula vitae of researchers.

The increased number of published papers may in some cases be related to dubious research practices such as salami slicing, whereby a single piece of research is split into many fragments, each of which is published separately. This is the concept of the so-called least publishable unit. Researchers may also publish the same material in different journals with different key words, captions and co-authors on each occasion, making detection by database scanners difficult. Plagiarism, meanwhile, is so rampant that many authors appear to follow the quip attributed to Wilson Mizner: “If you steal from one author, it’s plagiarism; if you steal from many, it’s research.”6 Plagiarism and autoplagiarism (dual publication by the same author in the same or a different language) are becoming so common that editors increasingly use special software to detect them.7,8

The numbers game has led to articles being evaluated by their citation index and authors by their H-index. This, in turn, has led to citation fever and to many innovative techniques for improving the citation index of an article. The most common is self-citation, in which the opening paragraphs of the Introduction and Discussion are devoted to citing the author’s previous articles. Scientists can also form a mutual-citation club, in which they improve their own and their friends’ citation indices by citing each other’s work. There is also the opposite problem, selective citation amnesia, in which researchers neglect to cite important work by their competitors in order to reduce their rivals’ citation indices. Many of these indices are open to manipulation by such widely practised techniques.
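The H-index is usually defined as the largest number h such that h of an author’s papers have each been cited at least h times. To illustrate how self-citation can move such an index, the short Python sketch below computes it for an invented record of eight papers; the citation counts and the helper `add_self_citations` are hypothetical and are not taken from any of the studies cited here.

```python
def h_index(citations):
    """Return the largest h such that at least h papers have h or more citations each."""
    ranked = sorted(citations, reverse=True)  # most-cited paper first
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            h = rank  # the top `rank` papers all have at least `rank` citations
        else:
            break  # counts only fall from here on, so h cannot grow further
    return h


def add_self_citations(citations, per_paper):
    """Hypothetical helper: add the same number of self-citations to every paper."""
    return [count + per_paper for count in citations]


# Invented citation record for one author's eight papers (illustrative only).
record = [10, 6, 5, 3, 3, 2, 1, 0]

print(h_index(record))                         # 3
print(h_index(add_self_citations(record, 2)))  # 5
```

In this toy record, two self-citations per paper raise the H-index from 3 to 5 without any change in the underlying work.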

Research and publishing misconduct

Questionable practices are being detected and reported with increasing frequency, potentially jeopardising the integrity of research. Such behaviour spans a spectrum of activities, from simple carelessness, bias, adjusting data to improve results and selective reporting to falsification of data and outright fraud. Although in many cases it is difficult to differentiate between scientific carelessness and wilful fraud, the key difference is the intention to deceive. By this definition, fraudulent scientific behaviour appears to be more common than was once thought. A systematic review and meta-analysis of survey data on how many scientists fabricate and falsify research found, alarmingly, that while only 1.97% of scientists admitted to having fabricated, falsified or modified data or results at least once, a serious form of misconduct by any standards, up to 33.7% admitted to other questionable research practices.9 In a sample of post-doctoral fellows at the University of California, San Francisco, 3.4% admitted they had modified data in the past and 17% were willing to select or omit data to improve their results.10 Meanwhile, 81% were willing to select, omit or fabricate data to win a grant or publish a paper.11 Three surveys documented scientists admitting to modifying or altering research data in order to make their research more impressive. Although many researchers did not regard such data improvement as falsification, biasing results towards a desired outcome crosses the hazy boundary between right and wrong, and that boundary is being crossed by increasing numbers of researchers.12-14 As most of the surveys were based on anonymous self-reporting, the actual frequency of research misconduct may be even higher. Experience also suggests that those responsible for acts of misconduct are likely to commit them more than once, which implies that misconduct may be considerably more widespread among medical practitioners and researchers than is generally recognised.

The problem is likely to be more common in the area of so-called grey science: material presented from the podium or as posters at prestigious conferences. These presentations are now published as abstracts in many journals and thus indirectly acquire the status of peer-reviewed publications. In financially troubled times, many conferences aim to increase attendance by accepting more papers, at least at poster level. Consequently, the peer-review process may not be as rigorous as it once was. The potential for a high level of questionable research practices in papers presented at meetings is of obvious concern.

The published paper is no longer sacred

The peer-review process, while struggling to maintain quality, frequently fails to safeguard scientific integrity. Honesty, good faith and good research practice, the cornerstones of science, cannot be policed or verified by reviewers. The increasing number of retractions in recent years is evidence of this.

Retraction Watch,15 a blog that tracks scientific retractions, documented more than 250 retractions over a 16-month period, prompting its authors to declare 2011 the ‘Year of Retraction’. Retractions have increased 15-fold in the past decade.16 While many retractions may be due to poor research practices, such as failing to obtain ethical committee approval, some may be the result of falsification and fabrication of data. A recent and extreme example, which shook the scientific world, is that of the South Korean stem-cell researcher Woo-Suk Hwang. The case is a stark example of research misconduct and highlights the woes of modern-day scientific publishing. Hwang et al published two highly cited papers in Science in 2004 and 2005,17,18 which claimed to have achieved therapeutic cloning in humans. In the first paper, the researchers claimed to have isolated the first human embryonic stem cell line derived by somatic cell nuclear transfer; in the second, they reported a refinement of the process that appeared to make clinical application possible. Hwang soon acquired the status of a national hero. However, it rapidly became clear not only that unethical practices had been used to obtain eggs from donors, but also that DNA fingerprinting of the cell lines showed the entire scientific content to be fabricated. Although Science retracted both articles, these events exposed how easily researchers can fabricate data and still achieve publication in respected journals.

This case highlights additional, important ethical considerations. Hwang was forced to resign from Seoul National University in December 2005 and was officially dismissed in March 2006. Nevertheless, he remained active in the research field and successfully submitted three manuscripts on behalf of his university in the same year. These were subsequently published. He has since resumed research in a private facility in South Korea, and further papers have appeared under his name.

This raises an interesting ethical issue of whether a researcher with a proven record of scientific fraud can be trusted to publish in areas of high importance. It is perhaps time for the scientific community to decide if authors should be prevented from submitting manuscripts to scientific journals once clear fraud and fabrication have been established.

Peer review needs review

There is a strong case for evaluating both the current procedures for investigating fraud and data manipulation and the efficacy of the peer-review process itself. Peer review is thought to have started when the Royal Society of London published Philosophical Transactions in 1665.19,20 Historically, the process has been regarded as the gatekeeper of scientific quality but not of scientific integrity. If the review process is the gateway to quality, then high-quality reviewers are essential. Yet the entire process is under strain, as there are too many journals seeking too few reviewers with the time, expertise and willingness to provide quality reviews. Most reviewers have no training in the fundamentals of good reviewing, while genuine experts in any field are too overburdened with other duties, such as teaching, mentoring students, their own research and administration, to review regularly. They are often forced to ask junior colleagues, sometimes even their PhD students, for help. Studies have shown that many reviewers, even for important journals, are unable to distinguish between what is good and what is excellent.21 Reviews of the editorial processes of several journals, including Cardiovascular Research,22 Radiology23 and the Journal of Clinical Investigation,24 have exposed poor agreement between different reviewers of the same manuscript. In practice, what one reviewer assesses as excellent may be considered unworthy of publication by another. Manuscripts rejected by one journal will often be published elsewhere, thereby obtaining the status of a peer-reviewed scholarly publication.

Is there a solution?

For this process to change, the system of rewards must change. Applicants to important posts should be assessed on the basis of their best four or five papers rather than on a list of all their publications. This might dissuade young researchers from joining the treadmill of the numbers game and encourage focused publication of high-quality research instead. A single highly cited article in a high-impact-factor journal should be of greater value than five or six articles in less frequently cited journals. Papers might also be shortened, as in Nature and Science. Meanwhile, libraries should be encouraged to cancel their subscriptions to journals that have little impact. The review process and the method of publication of journals must undergo scrutiny and any necessary change. In addition, methods to detect fraud and identify questionable research practices must be strengthened and perhaps separated from the review process.

Ultimately, a lasting solution may have to come from reform within. Young investigators must be taught to appreciate good research ethics as well as sound methodology. Professors must teach by example and build a research environment that fosters good research practice. At present, most researchers regard publishing not as a means of sharing and disseminating their work but as a route to professional advancement or to a grant. It is time the research community recognised that the few cases exposed so far are probably only the tip of an iceberg. The scientific community must find a means of internal cleansing; otherwise the very foundations of evidence-based medicine will be destroyed.

1 Abbott A, Cyranoski D, Jones N, et al. Do metrics matter? Nature 2010;465:860–862.

2 Coolidge HJ, ed. Archibald Cary Coolidge: Life and Letters. Books for Libraries: United States, 1932, p 308.

3 Björk B, Roos A, Lauri M. Scientific journal publishing: yearly volume and open access availability. Information Research 2009;14. http://informationr.net/ir/14-1/paper391.html (date last accessed 20 June 2012).

4 Bauerlein M, Gad-el-Hak M, Grody W, McKelvey B, Trimble S. We must stop the avalanche of low-quality research. The Chronicle of Higher Education, June 13 2010. http://chronicle.com/article/We-Must-Stop-the-Avalanche-of/65890/ (date last accessed 20 June 2012).

5 Thomson Reuters. Journal self-citation in the journal citation reports. http://thomsonreuters.com/products_services/science/free/essays/journal_self_citation_jcr/ (date last accessed 25 June 2012).

6 No authors listed. Wilson Mizner personal quotes. http://www.imdb.com/name/nm0594594/bio#quotes (date last accessed 20 June 2012).

7 McGee G. Me first! The system of scientific authorship is in crisis. Two new rules could help make things right. The Scientist: Magazine of the Life Sciences 2007;21:28.

8 Butler D. Copycat trap. Nature 2007;448:633.

9 Fanelli D. How many scientists fabricate and falsify research? A systematic review and meta-analysis of survey data. PLoS One 2009;4:e5738.

10 Eastwood S, Derish P, Leash E, Ordway S. Ethical issues in biomedical research: perceptions and practices of postdoctoral research fellows responding to a survey. Sci Eng Ethics 1996;2:89–114.

11 Kalichman MW, Friedman PJ. A pilot study of biomedical trainees’ perceptions concerning research ethics. Acad Med 1992;67:769–775.

12 Smith R. What is research misconduct? In: White C, ed. The COPE Report 2000: Annual Report of the Committee on Publication Ethics. London: BMJ Books, 2000:7–11. http://publicationethics.org/static/2000/2000pdfcomplete.pdf (date last accessed 20 June 2012).

13 De Vries R, Anderson M, Martinson B. Normal misbehaviour: scientists talk about the ethics of research. J Emp Res Hum Res Ethics 2006;1:43–50.

14 Lynöe N, Jacobsson L, Lundgren E. Fraud, misconduct or normal science in medical research: an empirical study of demarcation. J Med Ethics 1999;25:501–506.

15 No authors listed. Retraction Watch. http://retractionwatch.wordpress.com/ (date last accessed 15 June 2012).

16 Van Noorden R. Science publishing: the trouble with retractions. Nature 2011;478:26–28.

17 Hwang WS, Ryu YJ, Park JH, et al. Evidence of a pluripotent human embryonic stem cell line derived from a cloned blastocyst. Science 2004;303:1669–1674. Retraction in: Kennedy D. Science 2006;311:335.

18 Hwang WS, Roh SI, Lee BC, et al. Patient-specific embryonic stem cells derived from human SCNT blastocysts. Science 2005;308:1777–1783. Retraction in: Kennedy D. Science 2006;311:335.

19 Kronick DA. Peer review in 18th-century scientific journalism. JAMA 1990;263:1321–1322.

20 Burnham JC. The evolution of editorial peer review. JAMA 1990;263:1323–1329.

21 Wilson JD. Peer review and publication. J Clin Invest 1978;61:1697–1701.

22 Opthof T, Coronel R, Janse MJ. The significance of the peer review process against the background of bias: priority ratings of reviewers and editors and the prediction of citation, the role of geographical bias. Cardiovasc Res 2002;56:339–346.

23 Siegelman SS. Assassins and zealots: variations in peer review: special report. Radiology 1991;178:637–642.

24 Scharschmidt BF, DeAmicis A, Bacchetti P, Held MJ. Chance, concurrence, and clustering: analysis of reviewer's recommendations on 1,000 submissions to The Journal of Clinical Investigation. J Clin Invest 1994;93:1877–1880.